Art Machine Prototyping with an AI Chatbot

Several years ago I led some summer camp programs and professional development workshops where we built “twisted turtles,” or programmable art bots. These machines take inspiration from the classic Logo turtles made by Seymour Papert and the MIT Media Lab (as well as modern updates) but use rougher materials in their construction and create more idiosyncratic patterns. I’ve been exploring the edges of using AI tools in tinkering projects for the past couple of months and thought that this theme could be an interesting test case for the possibilities and challenges of incorporating chatbots into the tinkering process.

I wanted to write a reflection about the process to help me think about the pros and cons of working with a chatbot to make the robot. I feel a bit cautious about using AI tools for my professional work (summarizing, writing drafts, etc.) because I don’t want to offload creative work and curtail my own understanding. I also feel skeptical about incorporating AI into the tinkering process because of a general assumption that it leads to less imaginative ideas. I’m also worried about the environmental impact, the potential for inappropriate content and long-term risks, but I think it’s important to try things out with an open mind and to see both the good and the bad in new technologies. Since I’m a novice programmer, I thought that using the AI tool as a coding helper could be a good way to investigate what the chatbot might be good at and where it can run into trouble.

I started with a pretty solid idea of what kind of twisted turtle I wanted to make. The design I had in mind would have four buttons corresponding to forward, right, back and left. I wanted to be able to input a pattern by pressing the buttons and then have the robot “play back” the pattern in a loop. I used the free version of ChatGPT (signed in) to collaborate on the robot and get some help with the programming. I originally thought I would use MakeCode blocks (because that’s what I’m used to tinkering with), but I realized early on that a text-based platform like MicroPython would be much easier to iterate on alongside the AI chatbot.

I started by saying “I want to make a robot using the micro:bit” and the chatbot responded with some helpful clarifying questions. I knew that it’s important to make sure all the physical components work at the beginning of a project, so I asked the AI to make a test program for the four switches. I copied the code into the online MicroPython editor and pressed upload. There were a few small snags where the program didn’t work or showed error messages, and I had to copy and paste the errors into the dialog. For example, the AI initially connected to the servos in an unsupported way, and it went along with my suggested pins without checking that they couldn’t be used because they are shared with the micro:bit’s LED display. I also had to clarify that I was using continuous servos instead of positional servos, which changed the code.

It felt pretty natural to chat with the AI, and the responses were friendly and positive. It shared more information about the servos and programming, but usually I just wanted to copy and paste the updated code. Once the test program was basically working, it was helpful to be able to describe in loose language what was going wrong and get a quick fix. For example, at one point the buttons were always registering as pressed and we needed to add pull-up resistors, and another time the servos kept creeping slightly and the code needed a digital write to stop the motion completely.
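For readers curious what those two fixes involve, here is a hardware-free Python sketch of the ideas, not the code ChatGPT produced. It assumes the common hobby-servo convention of a 20 ms frame in which a pulse of about 1.5 ms means “stop” and roughly 1 ms or 2 ms means full speed in either direction; the exact stop point varies from servo to servo, which is one reason continuous servos creep. It also assumes each switch connects its pin to ground. The function names are hypothetical. On the micro:bit, the duty value would go to `pin.write_analog()` after `pin.set_analog_period(20)`, a `None` would become `pin.write_digital(0)`, and the button pins would be configured with `pin.set_pull(pin.PULL_UP)`.

```python
# Hardware-free sketch: the micro:bit calls are mentioned only in comments,
# so this runs on desktop Python. Timing constants are assumptions based on
# the common hobby-servo convention, not measured values.
PERIOD_MS = 20    # 50 Hz servo frame; on the board: pin.set_analog_period(20)
MAX_DUTY = 1023   # pin.write_analog() accepts 0..1023
STOP_MS = 1.5     # nominal "stop" pulse for a continuous servo (varies per servo)
SPAN_MS = 0.5     # +/- 0.5 ms from STOP_MS assumed to give full speed


def speed_to_duty(speed):
    """Map a speed in [-1.0, 1.0] to a write_analog() duty value.

    Returns None for a speed of 0, meaning "stop sending pulses"
    (on the board: pin.write_digital(0)) rather than relying on the
    nominal stop pulse, which can still let the servo creep.
    """
    if speed == 0:
        return None
    speed = max(-1.0, min(1.0, speed))      # clamp out-of-range requests
    pulse_ms = STOP_MS + speed * SPAN_MS
    return round(pulse_ms / PERIOD_MS * MAX_DUTY)


def is_pressed(raw_reading):
    """With a pull-up (pin.set_pull(pin.PULL_UP)), an idle switch reads 1
    and a pressed switch, which connects the pin to ground, reads 0."""
    return raw_reading == 0
```

Without the pull-up, an unconnected input pin can float and read as either value, which matches the “always pressed” symptom.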

Next I asked it to adjust the code, first so that the buttons would drive the movements by changing the direction of the motors, and then to store a pattern based on button presses. We refined the pattern so that it stored the sequence and even kept track of how long each button was pressed, so that the playback could incorporate those durations for more customizable patterns. These are fairly advanced topics that would normally be hard for me to understand or troubleshoot. The code went through several versions until we had the basic functionality, including displaying F(orward), R(ight), B(ack) or L(eft) on the screen and using the onboard A and B buttons to either play back the pattern or stop and clear the sequence.
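The record-and-playback behavior described above can be modeled as a list of (move, duration) steps. This is a minimal, hardware-free sketch with hypothetical names, not the program from the chat. On the robot, the length of a press could be measured with `running_time()` from the `microbit` module, and each played-back step would show its letter with `display.show()`, drive the servos, then `sleep()` for the stored duration.

```python
# Hypothetical, hardware-free model of the record/playback logic.
MOVES = {"F", "R", "B", "L"}


class PatternRecorder:
    def __init__(self):
        self.steps = []  # list of (move letter, press duration in ms)

    def record(self, move, pressed_ms):
        """Store one button press as a step; ignore unknown moves and
        zero-length presses (e.g. switch bounce)."""
        if move in MOVES and pressed_ms > 0:
            self.steps.append((move, pressed_ms))

    def clear(self):
        """What the 'stop and clear' button would call."""
        self.steps = []

    def playback(self, loops):
        """Yield the steps in order, repeating the whole pattern `loops`
        times. On the robot this would loop until the stop button is pressed."""
        for _ in range(loops):
            for step in self.steps:
                yield step


recorder = PatternRecorder()
recorder.record("F", 500)
recorder.record("R", 200)
for move, ms in recorder.playback(2):
    print(move, ms)
```

Storing durations alongside the letters is what lets a long press and a short press produce different strokes in the drawing.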

In much less time than it would usually take me to look things up on educational coding sites and struggle with shoddy documentation on message boards, everything was working well and I was ready to start on the physical build. I asked the chatbot to help me design the robot, and although it gave a few helpful tips, like adding velcro to the battery pack so that it could be removed easily and hot gluing parts directly to the white servo horns, I felt the description was a bit generic and vague. It prompted me to ask it to create a sketch of the layout, but the result wasn’t really helpful. Maybe I could have gone deeper to refine its thinking, but I thought it would be easier to put the chatbot aside and construct a model on my own.

I assembled all of the parts in a cardboard frame: servos mounted under the base, the breadboard, wires and micro:bit on the base level, a clothespin as the marker holder and some washers to balance the base. Then I added the buttons to the top layer, oriented to match the display (without really checking how the motors were moving).

This created the biggest challenge for ChatGPT to troubleshoot with me, as I quickly noticed that the buttons were not making the robot move in the right way. For example, when I pressed forward the bot moved right, and when I pressed back it moved left. The issue probably came from how I mounted the motors, but since everything was glued together I decided to just ask the AI to reprogram the bot to match the physical construction. Here it had a lot of trouble switching the outputs and keeping track of all of the variables. There were several iterations where some parts were fixed but others changed. For some of the buttons the display was right but the movement was wrong, and for others it was the other way around. In the end I ran out of free ChatGPT messages, so I found the right place in the code and manually swapped the servo motor numbers for the confused pair. I also had to rip off the hot-glued button piece and rotate it 90 degrees so that the orientation made sense.
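Mix-ups where the display and the movement disagree are easier to untangle when every button reads both its letter and its wheel speeds from one table. The sketch below is a hypothetical structure (the names `DRIVE`, `reverse_wheel`, `swap_wheels` and `command_for` are mine, not from the original program). It assumes two continuous servos mounted mirror-image, so driving straight spins them in opposite directions, and that (1, 1) turns right; which sign means which direction depends on the actual build.

```python
# Hypothetical drive table: button letter -> (left wheel, right wheel) speeds
# in [-1, 1]. Assumes mirror-image servo mounting, so driving straight means
# spinning the two wheels in opposite directions.
DRIVE = {
    "F": (1, -1),
    "B": (-1, 1),
    "R": (1, 1),
    "L": (-1, -1),
}


def reverse_wheel(table, side):
    """Return a copy of the table with one wheel's direction flipped,
    e.g. for a servo that was glued in facing the other way."""
    if side == "left":
        return {k: (-left, right) for k, (left, right) in table.items()}
    if side == "right":
        return {k: (left, -right) for k, (left, right) in table.items()}
    raise ValueError("side must be 'left' or 'right'")


def swap_wheels(table):
    """Return a copy with the left/right servos exchanged, e.g. when the
    two servo pins were wired the other way round."""
    return {k: (right, left) for k, (left, right) in table.items()}


def command_for(button, table):
    """The displayed letter and the wheel speeds come from the same entry,
    so the screen and the motion cannot disagree."""
    left, right = table[button]
    return button, left, right
```

Which correction matches a given robot still has to be found by testing, but each candidate is a one-line change to the data rather than edits scattered through the program.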

It was really interesting to see that the chatbot had no trouble quickly and accurately iterating on the code in the first part of the project, where it didn’t need a sense of the physical design. Once the code depended on how the hardware was attached to the bot, it got stuck and couldn’t correctly adjust the program. On one hand I was fine with this, and it even gave me more of a sense of accomplishment that I could fix something the AI couldn’t figure out, but I can imagine that for someone with less experience with the materials it could have been very frustrating.

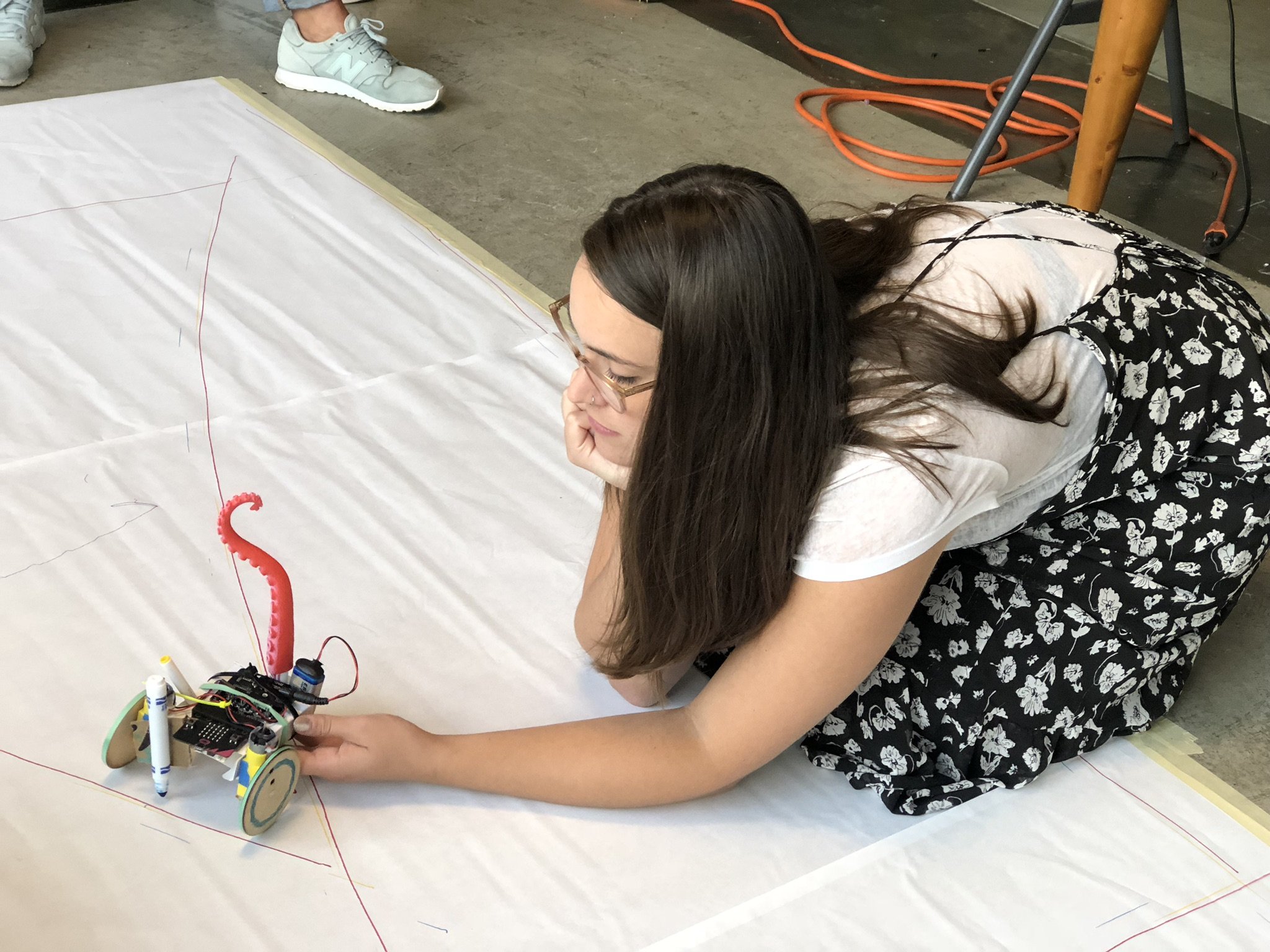

In the end the twisted turtle robot worked great and was really fun to play with. I love the way that it can repeat patterns and make drawings that range from chaotic to complex. There are a few things I would refine in this rough draft, and I would like to explore new directions like adding sounds, a pen up/pen down function, or radio broadcast to have a separate controller and bot, but overall the process resulted in something that really matched my initial ideas.

As well as adjusting the code, it’s really fun to play around with the physical elements, and getting programming help from the AI chatbot let me get into that mode much more quickly. I did a couple of small experiments to change the size of the wheels and test out different drawing media, like water on the patio. For this type of complex project, I think there’s still a lot of tinkering problem space to explore once the programming is finished.

At the end, I asked the AI to produce a summary of the process, and also to write about what it did well and what it might do differently next time. I think this is actually pretty valuable as a record of the experiment. Usually after building a project, it takes a lot of work to write up a blog post or reflection. While I don’t think the AI-generated text would be very helpful out of context, if I looked back at both the chat log and the summary six months from now, they would be a good refresher on what I tried and what other ideas to explore.

Looking back, one of the positive elements of the experience was the speed of iteration on code that would have been really hard for me to reach on my own. I was also impressed with the “facilitation” of the chatbot and can see how the positive encouragement, on-tap explanations and suggestions of what to try next (which I could easily reject) could be helpful to learners and tinkerers. I appreciated that because the project was a running dialog between me and the chatbot, at the end there was a record of the process and I could ask the chatbot to reflect on and summarize the experience. Working with the chatbot has also inspired me to try some hardware I haven’t explored yet (like the Arduino Nano). I have been slightly intimidated by text-based coding, but now I have built a bit more confidence to shoot for more complex robotic creations.

One of the negative aspects of tinkering with ChatGPT is that you lose some of the learning about how the programming works, and the temptation to just copy and paste without reading the explanation is high. I’m not so worried about that, because tinkering projects are more about getting an idea into the real world than learning specific concepts, but it still feels a bit creepy to have the AI “do it for you” with the coding. For the initial physical design, the AI-generated image was basically useless and too generic, which led me to tinker with the physical parts on my own. The model also didn’t seem able to handle troubleshooting that required visualizing the robot in the real world, and I had to adjust the code manually after a lot of back and forth.

Some questions remain with me after this experiment. The first comes from the fact that I had a strong idea and vision for the design at the outset. I wonder what it would have been like without that, and how helpful the AI could be for generating initial ideas. I have also worked a lot with micro:bit, servo motors, breadboards, switches and cardboard, so I had a foundation to build on and an instinct for a progression of testing the model (even though I couldn’t have figured out many of the coding solutions myself). I had a language to describe how the robot was not working and could communicate that to the chatbot. I wonder what this experience would be like for someone just starting out with these physical materials and digital concepts.

I’m surprising myself by coming up with new ways of imagining how to use chatbots in the tinkering process, both to create workshop and exhibit elements and to let learners explore their own ideas. There are other complex art machines that I would like to play around with, as well as possible directions for sound experiments, digital automata and marble machine projects. I would also like to return to some of the ideas I explored a few years ago around a network of micro:bits communicating with each other. I’m not too convinced by the content that AI produces in the digital world (animation and graphics), but as a helper for the digital side of computational tinkering projects I think there are a lot of interesting possibilities to explore and ways to give learners confidence to try new things.